Earlier this year NVIDIA announced their latest product that will join their existing lineup of DGX Station™, DGX-1 and DGX-2. The team at Boston Labs were excited to finally get their hands on the brand new DGX™ A100 and begin testing.

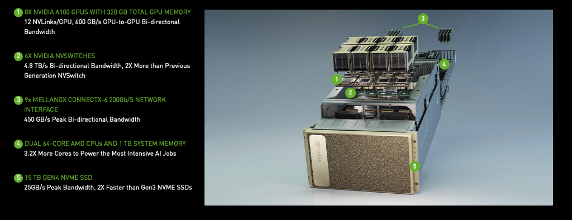

For those that are not aware, the NVIDIA® DGX™ A100 is NVIDIA's universal system for all AI workloads. It integrates 8 of the world's most advanced NVIDIA A100 Tensor Core GPUs, making it the very first 5 petaFLOPS AI system. The NVIDIA A100 GPU delivers unparalleled acceleration at every scale for AI, data analytics and high-performance computing (HPC) to tackle the world's toughest computing challenges! The A100 GPU offers up to 7x higher throughput than V100, and because Multi-Instance GPU (MIG) can partition each A100 into as many as seven independent instances, you have the flexibility to run GPU slices independently or use each GPU as a whole.
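As a rough sanity check of the 5 petaFLOPS headline, the sketch below multiplies out the per-GPU peak for FP16 Tensor Core maths. It assumes NVIDIA's published A100 figures of 312 TFLOPS dense and 624 TFLOPS with structured sparsity; these are theoretical peaks, not results we have measured.

```python
# Sketch: how the "5 petaFLOPS" headline adds up.
# Assumption: NVIDIA's published A100 FP16 Tensor Core peaks
# (312 TFLOPS dense, 624 TFLOPS with 2:4 structured sparsity).
GPUS_PER_SYSTEM = 8
FP16_TENSOR_TFLOPS_DENSE = 312
FP16_TENSOR_TFLOPS_SPARSE = 624  # 2x the dense figure via structured sparsity


def system_petaflops(per_gpu_tflops: float, gpus: int = GPUS_PER_SYSTEM) -> float:
    """Aggregate theoretical peak of the whole system in petaFLOPS."""
    return per_gpu_tflops * gpus / 1000


print(f"Dense:  {system_petaflops(FP16_TENSOR_TFLOPS_DENSE):.2f} PFLOPS")
print(f"Sparse: {system_petaflops(FP16_TENSOR_TFLOPS_SPARSE):.2f} PFLOPS")
```

The headline number corresponds to the sparse case (8 x 624 TFLOPS, just under 5 PFLOPS); dense FP16 work peaks at roughly half that.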

For more in-depth specifications, we recommend reading our dedicated NVIDIA DGX A100 product pages.

Graphic courtesy NVIDIA - https://www.nvidia.com/en-gb/data-center/dgx-a100/

A few weeks ago the Boston Labs team were hard at work installing and preparing our unit so that our close partners and key customers could begin proof of concept testing. Here's how the unit was deployed, along with some highlights of the interesting features NVIDIA has baked in along the way.

A guided tour of the DGX A100 and installation...

Note - Installing the DGX A100 requires both training and experience, so we recommend leaving it to our NVIDIA certified professional installations team. For example, thanks to its high density and feature-packed specification, the unit weighs a not insubstantial 143 kilograms - roughly that of two average people. For that reason a server lift is necessary, and installation should not be attempted without one.

Don't try to be a hero - leave it to the professionals and stay safe. Always follow H&S policy and use a server lift when necessary.

Packing & Unboxing

Because of the system's size and weight, our DGX A100 was delivered in a palletised box for safe transport by carrier. This really is precious cargo, so we want it to be safe from shock and mishandling throughout its journey.

DGX A100 as delivered

Here we see the opened palletised box with the protective upper layers and anti-static plastic bag removed. The unit is ready to rack but with the trademark golden bezel removed. The bezel is shipped separately in the accessory pack to keep it safe.

The DGX A100 unboxed

A box containing the bezel, accessories and rails sits atop the system. Included in the accessories are quick start guides, power cables and an NVIDIA-branded USB thumb drive containing restore software - another nice touch (until somebody borrows it, of course!).

Underneath the accessory layer sits the rackmount kit, which holds the server at a fixed position in the rack. Naturally, because the laws of physics exist, sliding rails aren't available for the DGX A100: extending a system this heavy out of the rack would create a real danger of the rack toppling if appropriate precautions aren't taken.

Front bezel, accessories & rails

As you can see, the unit occupies 6U of rack space. With the bezel not yet installed, we can clearly see the front-accessible features: 8 x front-mounted high performance cooling fan caddies with chunky grab handles for easy removal, a power button and LED indicators to the right, and 8 x 2.5" NVMe drive bays spread across the lower portion of the chassis.

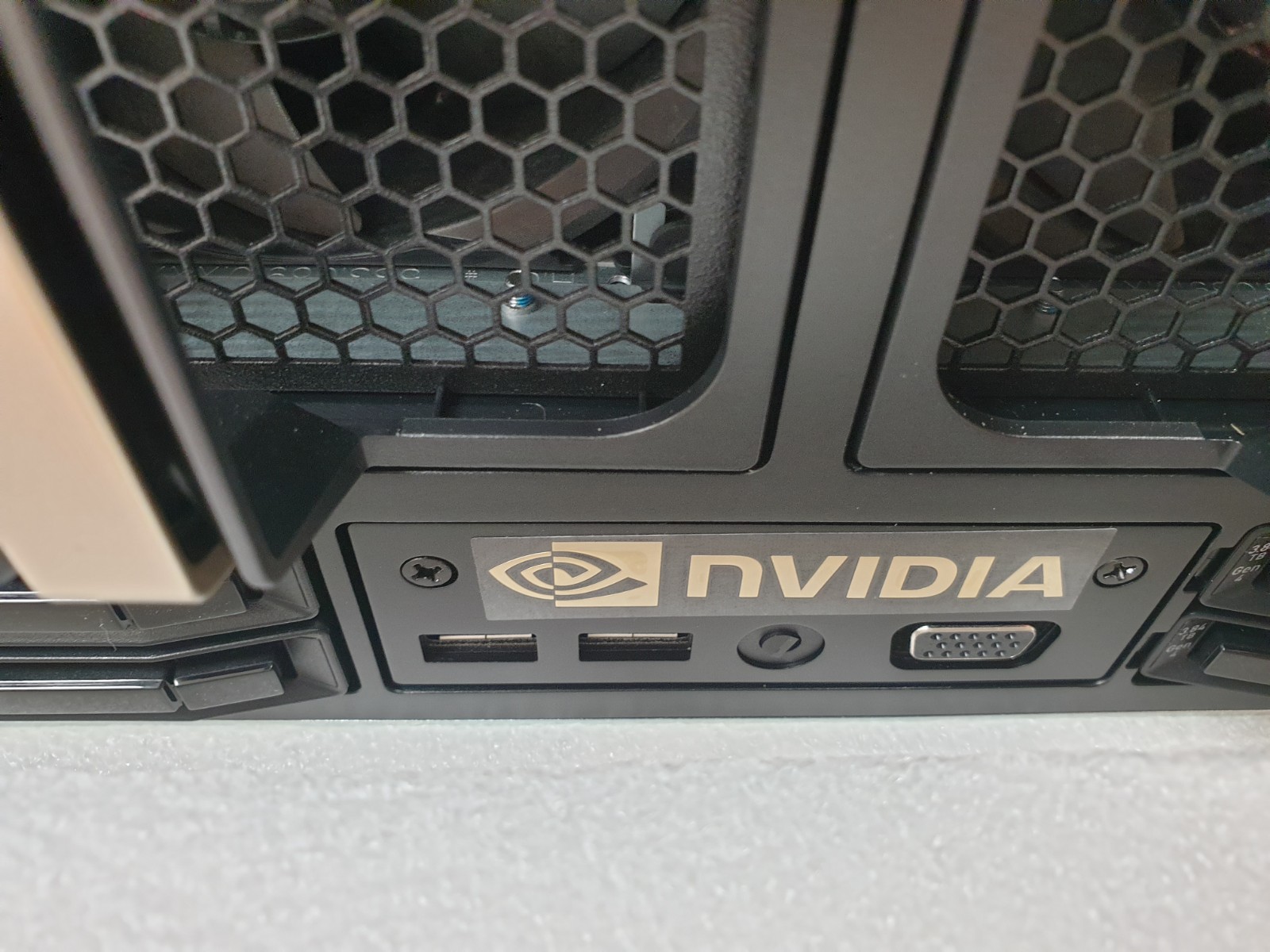

Hidden behind the bottom foam strip in the image below are the USB and VGA connectors, located at the bottom of the enclosure for crash cart/KVM connectivity in the cool aisle. This is a nice touch for the comfort of those who will work on the system in the datacentre: being able to connect a console to either the front or the rear of the unit means you can choose to stay away from the noisy, blowy and hot aisle - the preference of most engineers.

Front USB and VGA for KVM in the cool aisle

A close-up of one of the NVMe caddies, populated with a 3.84TB Samsung PM1733 - a very respectable choice for high-performance, high-capacity storage. These drives are used in RAID 0 for the data cache only.

The drive trays used are clearly labelled with their respective capacity and PCI-Express generation and also have a clear set of LED indicators for activity, failure and identification purposes.

The front-mounted cooling fan caddies are released with a button located at the top of the handle. Once released, the fans can be pulled out from the front and replaced whilst the system is running, but be careful not to put fingers near the blades or to operate a fan without its guard.

Each caddy houses 2 x 92mm fans for maximum airflow and some redundancy. Overall, the DGX A100 moves 840 CFM of air with the fans at 80% PWM. As you can imagine, this is suited only to a datacentre and not something anyone would want under their desk.
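To put the 840 CFM figure into more familiar terms, here is a small breakdown per caddy and per fan, plus a conversion to cubic metres per hour. It assumes airflow is shared evenly across all 8 caddies and 16 fans, which is a simplification: real per-fan airflow depends on position and system impedance.

```python
# Sketch: breaking down the quoted 840 CFM total airflow (fans at 80% PWM).
# Assumption: airflow is split evenly across every caddy and fan.
TOTAL_CFM = 840
CADDIES = 8
FANS_PER_CADDY = 2
M3H_PER_CFM = 1.699  # 1 cubic foot per minute is roughly 1.699 m³/h

per_caddy = TOTAL_CFM / CADDIES
per_fan = per_caddy / FANS_PER_CADDY
total_m3h = TOTAL_CFM * M3H_PER_CFM

print(f"{per_caddy:.1f} CFM per caddy, {per_fan:.1f} CFM per fan")
print(f"{total_m3h:.0f} m³/h in total")
```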

Another nice touch is an LED indicator built into the top right corner of the fan caddy to help identify specific units in the unlikely event that maintenance is required.

Warning - cooling fans can cause injury and be dangerous if not handled properly - be sure to read the manual and always handle with care.

The rear of the DGX hosts a variety of ports, plugs and access panels ready to plumb into the power and networking infrastructure of your rack or datacentre. There are 3 defined sections: the GPU tray, the motherboard (IO) tray and the power supply plane. The power supplies, the motherboard tray and the GPU tray are all removable for servicing. Internally, a midplane connects these sections together for high-speed data and power transfer - an impressive feat of electronic engineering.

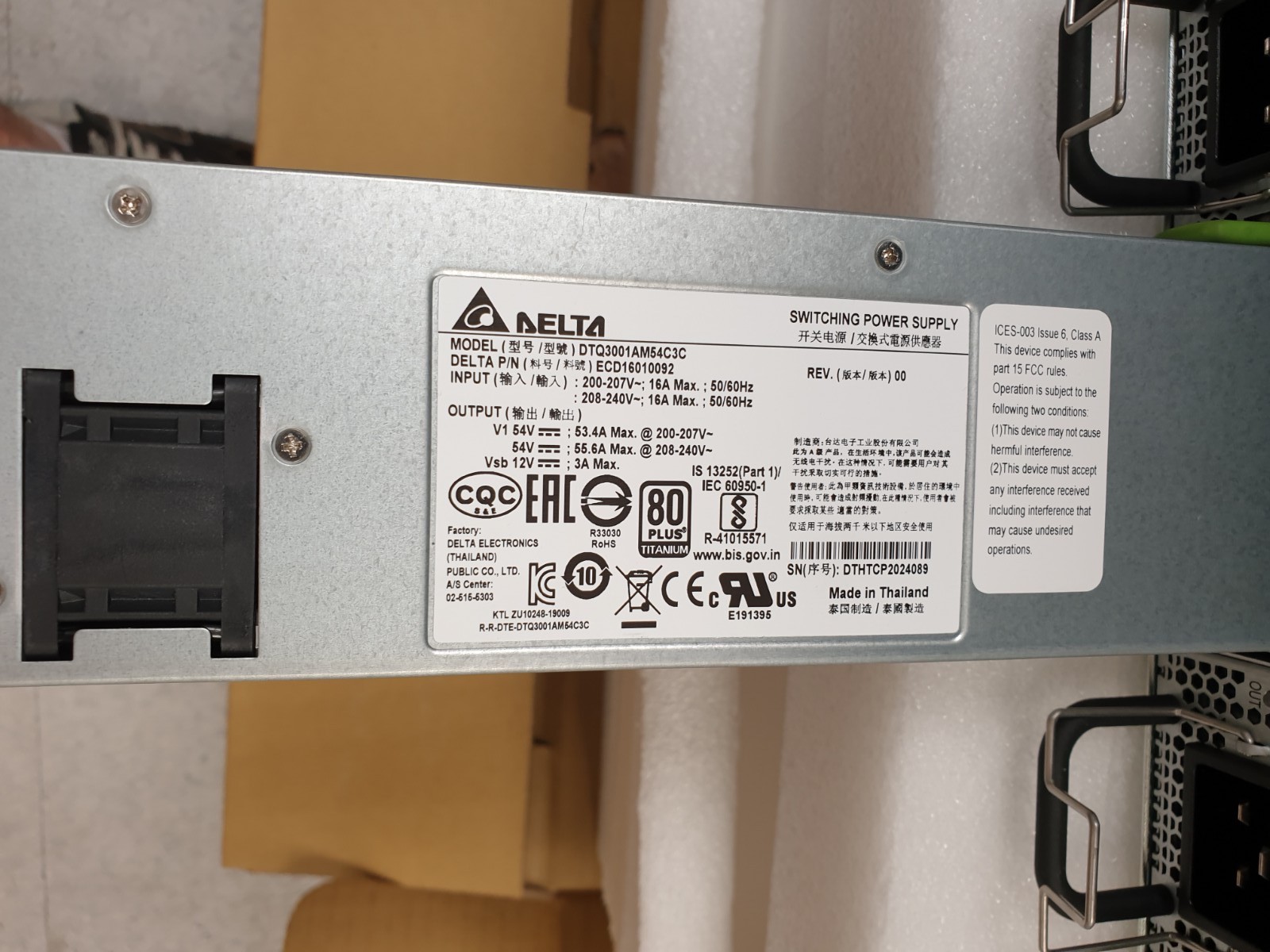

Starting from the bottom, there are 6 x C20 inlet connectors for the power supplies' AC input. These feed the Delta 3kW PSUs, which provide 3+3 redundancy and easily cover the 6.5kW maximum power draw, keeping the system up even with three PSUs (a complete A or B feed) disconnected or failing. This makes them perfect for A/B power feeds. NVIDIA also provide power cables with locking connectors to help reduce incidents of power cords working loose from repeated contact with other cables or being pulled out by accident.
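The redundancy arithmetic is easy to check. The sketch below works out how many 3kW supplies are needed to carry the 6.5kW maximum and how many can therefore be lost. It assumes each PSU delivers its full 3kW rating, which for supplies of this class typically requires a 200-240V input; at lower input voltages the usable rating may be derated.

```python
import math

# Sketch: PSU redundancy check using the figures quoted in this article.
# Assumption: each PSU delivers its full 3kW rating (typically high-line input).
PSU_COUNT = 6
PSU_RATED_KW = 3.0
SYSTEM_MAX_KW = 6.5


def psus_required(load_kw: float, psu_kw: float) -> int:
    """Smallest number of PSUs whose combined rating covers the load."""
    return math.ceil(load_kw / psu_kw)


required = psus_required(SYSTEM_MAX_KW, PSU_RATED_KW)
tolerated_failures = PSU_COUNT - required

# With an A/B split, losing one feed takes out half of the PSUs.
one_feed_kw = (PSU_COUNT // 2) * PSU_RATED_KW

print(f"{required} PSUs needed, {tolerated_failures} can fail")
print(f"One feed alone supplies {one_feed_kw:.1f} kW "
      f"(needs {SYSTEM_MAX_KW} kW): {'OK' if one_feed_kw >= SYSTEM_MAX_KW else 'short'}")
```

Three PSUs give 9kW of capacity against a 6.5kW peak, which is why a 3+3 layout survives the loss of an entire A or B feed.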

Sneakily hidden next to PSU 0 on the far left is the system serial number/asset tab - should a quick check for identification or warranty purposes be necessary.


A close up of the Delta 3kW PSU

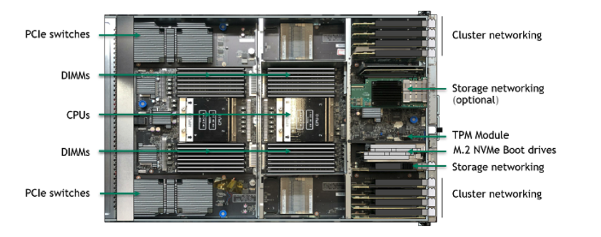

Above the power supply plane, the motherboard tray hosts the add-in cards and general IO ports.

Our DGX is fitted with 10 x 200Gbit/s Mellanox ConnectX-6 VPI InfiniBand/Ethernet ports, spread across 8 x single-port controllers for inter-node cluster bandwidth and one dual-port controller for storage connectivity. An additional 2 dual-port cards can be added to satisfy those with huge storage bandwidth requirements - ideal for NVMe-oF platforms such as the Igloo Talyn.
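For a feel of how much network bandwidth that adds up to, the sketch below totals the raw line rate for each group of ports described above. It deliberately ignores encoding and protocol overheads, so real-world throughput will be lower.

```python
# Sketch: raw aggregate line rate of the ConnectX-6 ports described above.
# Assumption: every port runs at 200Gbit/s; overheads are ignored.
PORT_GBITS = 200

CONFIG = {
    "compute (8 x single-port)": 8 * 1,
    "storage (1 x dual-port)": 1 * 2,
    "optional storage (2 x dual-port)": 2 * 2,
}


def aggregate(ports: int, gbits: int = PORT_GBITS) -> tuple[float, float]:
    """Return (Tbit/s, GB/s) for a number of ports at the given line rate."""
    total_gbits = ports * gbits
    return total_gbits / 1000, total_gbits / 8


for name, ports in CONFIG.items():
    tbits, gbytes = aggregate(ports)
    print(f"{name}: {ports} ports = {tbits:.1f} Tbit/s ({gbytes:.0f} GB/s)")
```

One caveat on the dual-port figures: these are sums of port rates, and a single PCIe Gen4 x16 slot (roughly 252Gbit/s per direction after encoding) cannot carry both 200Gbit/s ports at full rate simultaneously.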

In the centre of the slot arrangement sit dual 1.92TB M.2 NVMe drives in software RAID 1 for the operating system, leaving the U.2 drives in the hot-plug bays at the front reserved for data and caching as necessary.
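The two RAID levels used in this system trade capacity against resilience in opposite directions. The sketch below computes usable capacity and the number of survivable drive failures for the mirrored OS pair and the striped data cache. The data cache drive counts (4 or 8 populated bays) are hypothetical, since we haven't stated how many bays a given configuration fills.

```python
# Sketch: usable capacity vs fault tolerance for RAID 0 and RAID 1.
# Assumption: data cache drive counts below are hypothetical examples.
def raid_summary(level: int, drives: int, drive_tb: float) -> tuple[float, int]:
    """Return (usable TB, number of drive failures the array survives)."""
    if drives < 1:
        raise ValueError("need at least one drive")
    if level == 0:
        # Striping: capacities add up, but losing any drive loses the array.
        return drives * drive_tb, 0
    if level == 1:
        # Mirroring: every drive holds a full copy, so capacity is one drive's.
        return drive_tb, drives - 1
    raise ValueError(f"RAID {level} is not covered by this sketch")


os_mirror = raid_summary(1, 2, 1.92)  # 2 x 1.92TB M.2 OS drives
cache_4 = raid_summary(0, 4, 3.84)    # hypothetical: 4 bays populated
cache_8 = raid_summary(0, 8, 3.84)    # hypothetical: all 8 bays populated

print("OS mirror:", os_mirror)
print("Cache, 4 drives:", cache_4)
print("Cache, 8 drives:", cache_8)
```

RAID 0 makes sense for the cache because it maximises capacity and bandwidth, and cached data can be re-fetched from the storage network if a drive fails; the OS gets RAID 1 because losing it would take the system down.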

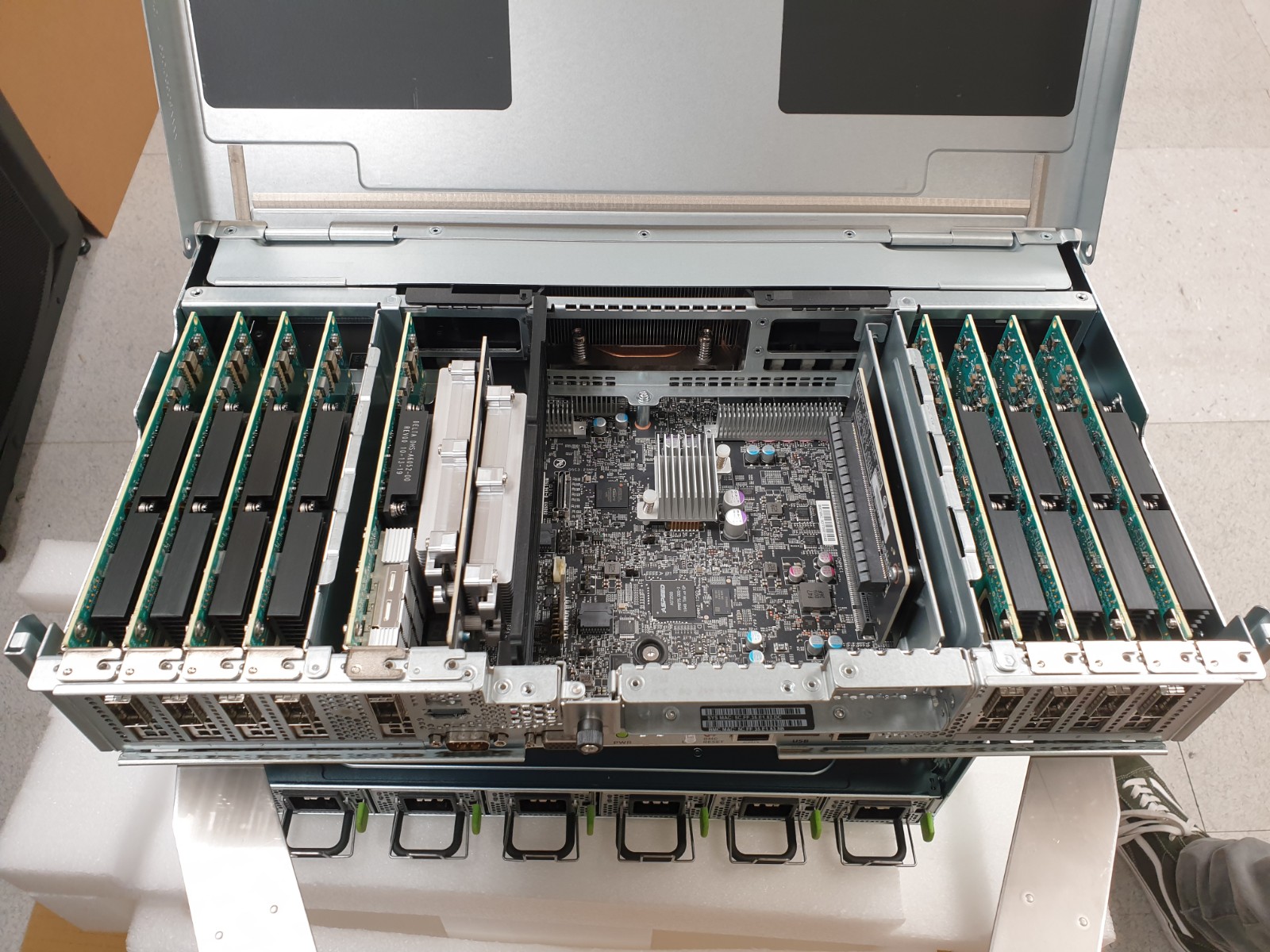

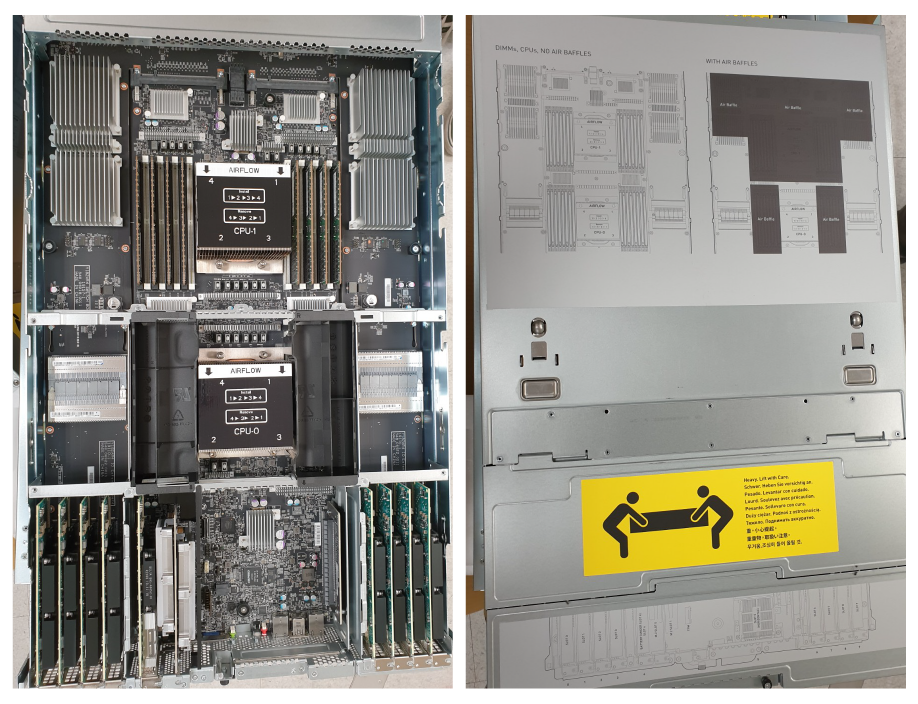

The motherboard tray can be removed by unscrewing two green thumbscrews and opening the release levers. Once slid out from the rear of the chassis, the tray lid can be opened in two places: one section gives access to the IO and add-in card area, and the second to the rest of the motherboard, including the CPUs and memory.

Motherboard tray IO and AOC access - a convenient hinge holds the panel in place but allows free access.

The second portion of the motherboard tray has a more traditional removable top panel, which is conveniently printed with a schematic of the layout to aid servicing and troubleshooting.

In the centre there is a clear airflow channel for the dual AMD EPYC™ 7742 processors and their accompanying memory. Either side are PCI Express switches connecting the various network controllers to the system. A dual-processor AMD EPYC™ platform typically offers up to 128 lanes of PCI-Express, but with nine or more x16 network adapters and numerous other devices, some lane management is needed to connect them all.
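A quick lane tally shows why those PCIe switches are unavoidable. The device inventory below is an assumption for illustration: x16 host links for the GPUs and network adapters, x4 for each NVMe drive, and all 8 front bays populated. Only the 128-lane CPU budget comes from the text above.

```python
# Sketch: PCIe lane demand vs the CPUs' lane budget.
# Assumption: this device inventory and the link widths are illustrative.
DEVICES = [
    # (description, count, lanes per device)
    ("A100 GPU host link", 8, 16),
    ("ConnectX-6 single-port adapter", 8, 16),
    ("ConnectX-6 dual-port adapter", 1, 16),
    ("U.2 NVMe data drive", 8, 4),
    ("M.2 NVMe OS drive", 2, 4),
]
CPU_LANES = 128  # typical dual-socket EPYC budget quoted above

demand = sum(count * lanes for _, count, lanes in DEVICES)

for name, count, lanes in DEVICES:
    print(f"{count:>2} x {name:<32} {count * lanes:>4} lanes")
print(f"Total demand {demand} lanes vs {CPU_LANES} from the CPUs "
      f"({demand / CPU_LANES:.2f}x oversubscribed)")
```

Switches let many endpoints share fewer upstream CPU lanes, and they can also route traffic directly between devices on the same switch (for example GPU to NIC), which helps keep that oversubscription from becoming a bottleneck.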

Sitting above the motherboard tray is the GPU tray, of which we can only see the vented GPU cooling exhaust panel from the rear. This segmented design aids airflow, giving the GPUs a clear, unobstructed path of clean air rather than air pre-heated by the CPU heatsinks or add-in cards, as is common in many other system designs.

From the bottom we can see the tray's connectors to the system midplane and, above them, the 6 NVSwitch chips and their attached heatsinks. Finally, we can see where the money is really spent: the impressive gold-coloured heatsinks covering the most important part of the DGX, the 8 x NVIDIA A100 GPUs themselves. As SXM4 modules, each GPU has massive bandwidth for intercommunication - 12 NVLinks per GPU, giving 600GB/s of total bandwidth (300GB/s in each direction). To put that in perspective, a PCI Express Gen 4 x16 link, currently the best interconnect option available for most add-in cards (including many NVIDIA GPUs), gives roughly 32GB/s in each direction, so NVLink offers nearly 10 times the bandwidth.
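The NVLink vs PCIe comparison is easy to get wrong, because the 600GB/s NVLink figure counts both directions while the usual 32GB/s PCIe Gen4 x16 figure is per direction. The sketch below compares like with like, assuming the NVLink 3 rate of 25GB/s per link per direction and PCIe Gen4's 16GT/s per lane with 128b/130b encoding; packet and protocol overheads are ignored on both sides.

```python
# Sketch: NVLink 3 (A100) vs PCIe Gen4 x16, compared per direction.
# Assumptions: 25GB/s per NVLink per direction; PCIe Gen4 at 16GT/s per lane
# with 128b/130b encoding; protocol overheads ignored.
PCIE4_GT_PER_S = 16
PCIE_ENCODING = 128 / 130
PCIE_LANES = 16

NVLINKS_PER_GPU = 12
NVLINK_GB_PER_DIR = 25


def pcie_gbytes_per_dir(lanes: int = PCIE_LANES) -> float:
    """Usable PCIe Gen4 bandwidth in GB/s, one direction."""
    return PCIE4_GT_PER_S * lanes * PCIE_ENCODING / 8


nvlink_per_dir = NVLINKS_PER_GPU * NVLINK_GB_PER_DIR  # one direction
nvlink_total = 2 * nvlink_per_dir                      # both directions
pcie_per_dir = pcie_gbytes_per_dir()
ratio = nvlink_per_dir / pcie_per_dir

print(f"NVLink: {nvlink_per_dir} GB/s per direction, {nvlink_total} GB/s total")
print(f"PCIe Gen4 x16: {pcie_per_dir:.1f} GB/s per direction")
print(f"NVLink advantage: {ratio:.1f}x")
```

Dividing 600GB/s by a per-direction 32GB/s would roughly double the apparent advantage, which is why it matters to compare both links in the same direction.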

With our internal tour over, it's time to physically rack the system ready for bring up and application installation. Our system is being placed at the bottom of one of our lab racks.

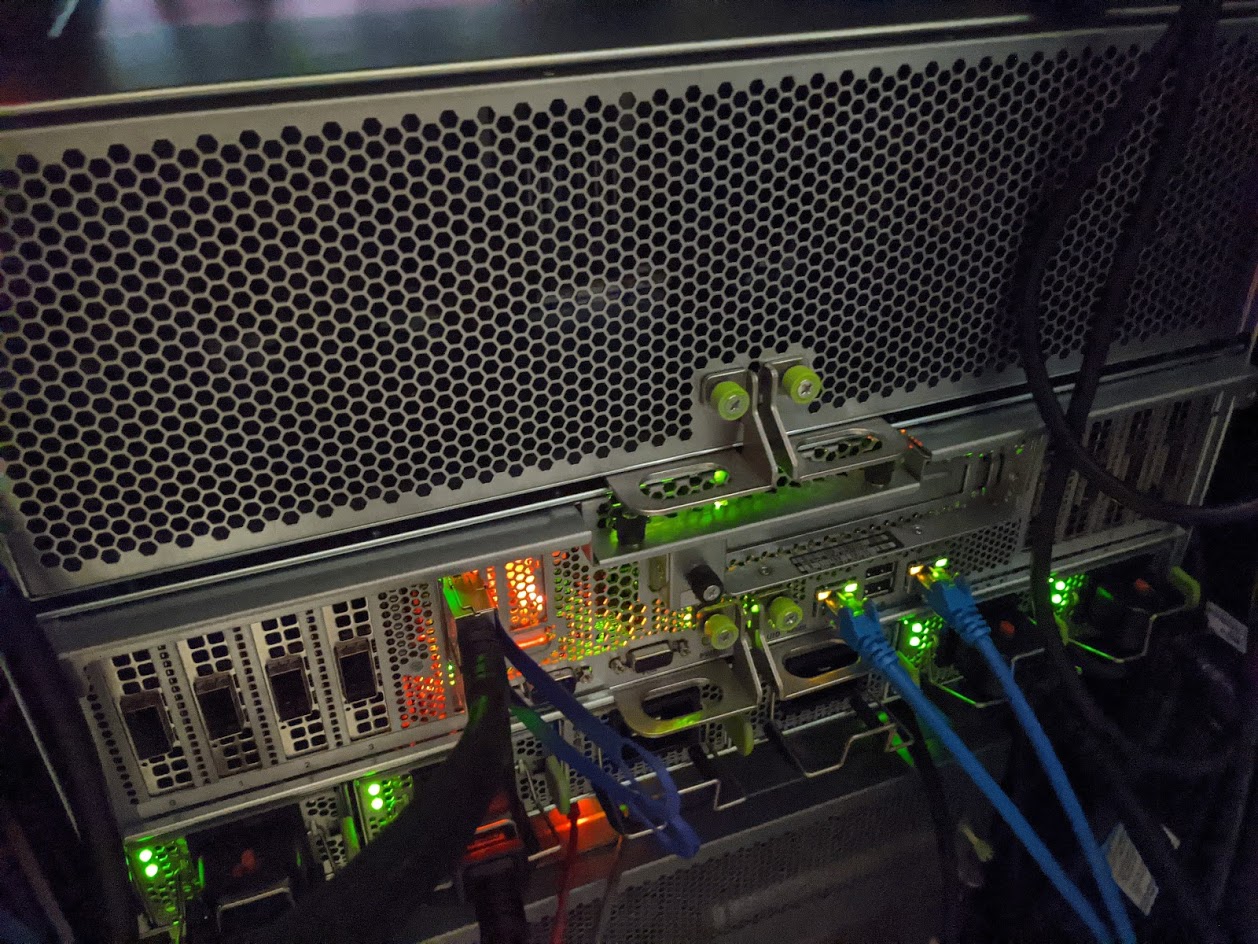

Power, console and networking are connected ready for bring up. In our case, we will only connect the storage network for now as we are working on a standalone DGX A100 but have plans to scale later.

Our Flash IO Talyn and DDN EXA5 Lustre storage units are already connected to our SDN Mellanox ConnectX-6 switch for our existing DGX-2 deployment, so we're ready to bring them online and begin importing our models and training data at lightning-fast 100 and 200Gbit/s interconnect speeds.
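To give a sense of what those link speeds mean when loading training data, the sketch below estimates transfer time for a dataset over a single link. The 1TB dataset and the 80% efficiency factor are hypothetical illustration values, not measurements from our lab; real throughput also depends on the storage back end and file system.

```python
# Sketch: ideal vs derated time to move a dataset over one network link.
# Assumption: dataset size and efficiency factor are hypothetical values.
def transfer_seconds(dataset_tb: float, link_gbits: float, efficiency: float = 1.0) -> float:
    """Seconds to move dataset_tb (decimal TB) over a link_gbits Gbit/s link.

    efficiency scales the raw line rate to allow for protocol and storage
    overheads; 1.0 is the ideal line rate, which is never quite achieved.
    """
    bits = dataset_tb * 1e12 * 8
    return bits / (link_gbits * 1e9 * efficiency)


DATASET_TB = 1  # hypothetical dataset size

for link in (100, 200):
    ideal = transfer_seconds(DATASET_TB, link)
    derated = transfer_seconds(DATASET_TB, link, efficiency=0.8)
    print(f"{link} Gbit/s: {ideal:.0f} s ideal, {derated:.0f} s at 80% efficiency")
```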

TOR Switch connected

With the unit installed, it's simply a case of fitting the beautiful golden front bezel and powering the system up. Our HPC and Deep Learning/AI training team have been waiting with bated breath to get some time on the DGX A100 and gain insight into how it can accelerate our customers' workloads. Stay tuned for a follow-up article covering the results of their testing, detailing the applications that benefit most from the technical enhancements of the Ampere architecture and this exciting DGX.

Boston Labs is all about helping our customers make informed decisions, so they can select the right hardware, software and overall solution for their specific challenges. Please do get in contact to apply for a test drive of the DGX A100 or any of the great technology we showcase from our close partners at NVIDIA, Intel, AMD, Mellanox and many more - we look forward to hearing from you all.

Written by:

Dan Johns, Head of Technical Services at Boston Limited